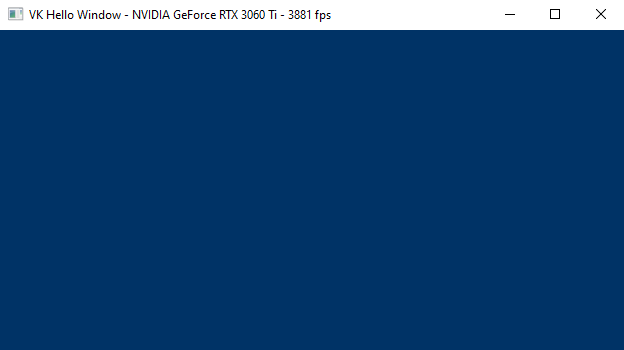

vk01.A - Hello Window

by P. A. Minerva

1 - Introduction

This section provides an overview of the most importan Vulkan concepts. It includes information that is already available in the official documentation and other resources. Observe that this section can be quite dense with information, and may contain some references that require some prior knowledge. Therefore, I also included basic computer graphics concepts (rendering pipeline, stages, shaders, etc.) to help keep this section as self-contained as possible. However, if you still have doubts, I recommend coming back to this section after going through the next tutorial, where general computer graphics concepts will be explained in detail from scratch.

1.1 - What is Vulkan?

Vulkan is an API Specification created and maintained by The Khronos Group, an open consortium of leading hardware and software companies. The Vulkan specification outlines a low-level API that is designed to expose the GPU to application developers with a minimal level of abstraction provided by the device driver. This allows Vulkan applications to take advantage of lower CPU overhead, reduced memory usage, and increased performance stability.

For a GPU to be compatible with Vulkan, it requires a hardware vendor-supplied driver that maps Vulkan API calls to the hardware, which of course must be capable of executing the corresponding operations. NVIDIA, AMD, and Intel offer their Vulkan implementations through driver update packages for a variety of GPU architectures and platforms, including Windows, Linux, and Android.

The Khronos Group provides C99 header files that are derived from the Vulkan Specification, and can be used by developers to interface with a hardware-specific implementation of the Vulkan API. Additionally, multiple language bindings are available for developers who do not work with C code.

1.2 - Why Vulkan?

You may be wondering why The Khronos Group didn’t just release a new version of the OpenGL API and why you should choose Vulkan over OpenGL.

To answer the first question, the fact is that after the launch of Vulkan, a new version of OpenGL was indeed released. However, OpenGL and Vulkan offer the same functionality but with different levels of abstraction in their communication with the GPU. The differences in design between OpenGL and Vulkan are so significant that updating OpenGL without fundamentally changing it was nearly impossible. Hence, Vulkan was created.

Regarding the second question, Vulkan is a low-level API that provides applications with a lot of power, but in return it also requires applications to take on a lot of responsibility in ensuring proper functionality. Before you begin learning or working with Vulkan, it’s important to evaluate its pros and cons to determine if it’s the right choice for your projects.

1.2.1 - Advantages

Despite being more difficult to learn and use, Vulkan still brings some advantages compared to OpenGL, as outlined in the following sections.

State management

OpenGL uses a single global state which is known at draw time, meaning that it can be challenging and/or costly to implement certain optimizations.

The term “state” refer to the set of information the GPU needs to execute its work. It specifies the behaviour\setup of every stage in the pipeline when we are going to draw something: shader code (that is, the code the GPU executes for programmable stages), input and output resources (of the pipeline stages), primitive topology (that is, if you want to draw points, lines, or triangles), etc.

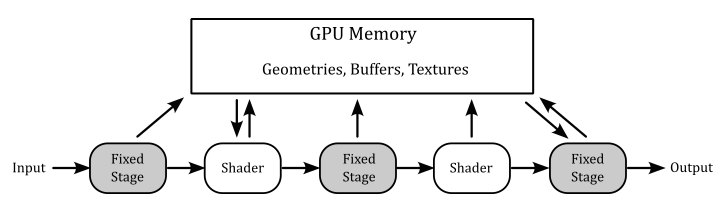

Graphics operations are performed through a rendering pipeline composed of stages. Some of these stages are called shaders and are programmable, which means we can set the tasks performed by writing special programs (shader code) to run on GPU cores for programmable stages.

Other stage are fixed, which means they perform predefined operations. However, we can still configure fixed stages to set how they should perform their tasks.

Each stage outputs its results as input of the next stage. Additionally, memory resources (such as buffers and textures) can be accessed in GPU memory by pipeline stages to perform their tasks. However, if programs executed by programmable stages make use of memory resources, we need to bind resource variables in the shader code to actual resources in memory.

Additional details will be provided in the next tutorial.

Vulkan uses pipeline objects to store used states ahead of time, allowing more predictable application of shader-based optimizations and reducing runtime costs.

This difference results in a notable decrease in the CPU overhead of graphics drivers, but it requires applications to determine the necessary states upfront to construct the state objects and benefit from the reduced overhead.

API execution model

OpenGL uses a synchronous rendering model, which implies that each API call has to act as if all prior API calls have already been executed. However, in practice no modern GPU works in this way, as rendering workloads are processed asynchronously. The synchronous model is just an illusion maintained by the device driver. To maintain this illusion, the driver has to monitor which resources are being read or written by each rendering operation, guarantee that the workloads are carried out in a valid order to avoid rendering issues, and ensure that API calls which need a data resource wait until that resource is safely available.

Vulkan uses an asynchronous rendering model that mirrors how the modern GPUs work. Applications queue rendering commands into a queue, manages the execution order of workloads through explicit scheduling dependencies, and explicitly synchronize access to resources.

This difference results in a notable decrease in the CPU overhead of graphics drivers, but it requires applications to handle command recording and queuing, and resource synchronization.

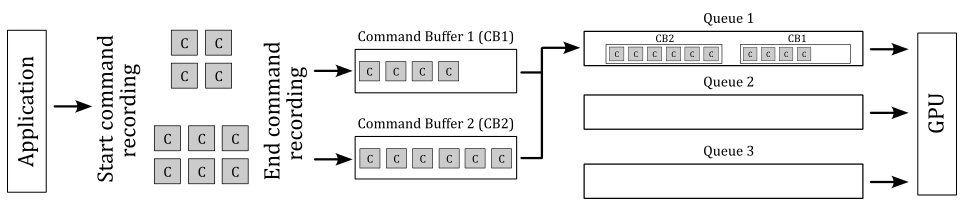

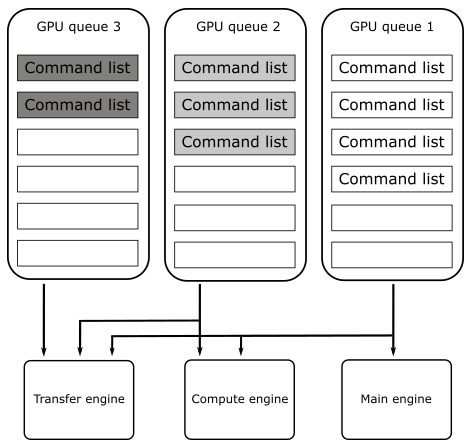

GPUs have queues to store list of commands called command buffers submitted by applications. The command buffer is the GPU unit of execution. That is, a GPU doesn’t execute single commands sent from the application, as suggested by the synchronous model exposed by OpenGL. On the other hand, the execution model of Vulkan is asynchronous in the sense that CPU (through a Vulkan application) and GPU have different timelines (that is, the time when they execute something). For example, a Vulkan application can record graphics commands into command buffers and submitting them into a queue (CPU timeline). Then, this queue is read by the device (GPU) that executes the command buffers (GPU timeline). Also, several command buffers can be built simultaneously in parallel using multiple threads in an application. The following diagram shows a simplified representation of this execution model.

In the image above, the application records two command buffers containing several commands. These commands are then submitted to one or more queues depending upon the workload nature. The device accesses its queues to process command buffer workloads and either displays them on the output display or returns them to the application for further processing.

API threading model

OpenGL uses a single-threaded rendering model, which greatly restricts applications from using multiple CPU cores to parallelize rendering operations.

Vulkan uses a multi-threaded rendering model, which allows applications to parallelize rendering operations across multiple CPU cores.

This difference allow Vulkan applications to benefit from systems with a multi-core CPU.

API error checking

OpenGL use extensive run-time error checking which increases driver overheads in all applications.

Vulkan does not require the driver to implement runtime error checking. This implies that incorrect use of the API may result in rendering corruption or application crashes. Instead of the constant error checking of OpenGL, Vulkan offers a framework that permits layer drivers to be placed between the application and the native Vulkan driver. These layers can introduce error checking and other debugging functionality and have a significant advantage since they can be removed when not needed.

This difference results in a notable decrease in the CPU overhead of graphics drivers, at the expense of making many errors undetectable unless a layer driver is used.

Memory allocation

OpenGL uses a client-server memory model. This means the application (the client) cannot directly allocate or manage the memory backing GPU (the server) resources. The driver manages all of these resources individually using internal memory allocators, and synchronizes resources between client and server.

Vulkan is designed to give the application more direct control over memory resources, how they are allocated, and how they are updated.

This difference results in a notable decrease in the CPU overhead of graphics drivers, and gives the application more control over memory management. The application can further reduce CPU load: for example, by grouping objects with the same lifetime into a single allocation and tracking them collectively instead of tracking them individually.

Memory usage

OpenGL uses a typed object model, which tightly couples a logical resource with the physical memory which backs it. While this model is easy to use, it prevents reusing the same physical memory for different resources (whenever possible).

Vulkan separates the concept of a resource from the physical memory which backs it. This makes it possible to reuse the same physical memory for multiple different resources at different points in the rendering pipeline.

The ability to alias memory resources can be used to reduce the total memory footprint of the application by recycling the same physical memory for multiple uses at different points in a frame creation.

1.2.2 - Disadvantages

One of the major drawbacks of Vulkan is that it places a significant amount of responsibility on the application, including memory allocation and resource synchronization. While this provides a greater level of control and customization, it also increases the risk of suboptimal application behavior, resulting in decreased performance.

1.2.3 - Conclusions

The primary benefits of using Vulkan are associated with a lower CPU overhead, which is attributed to the minimal level of abstraction provided by the Vulkan drivers. It is important to note that Vulkan does not necessarily guarantee a performance enhancement. The GPU hardware remains the same, and the rendering capabilities made available by Vulkan are similar to those offered by OpenGL. If your application’s performance is restricted by GPU rendering speed, it is improbable that Vulkan will result in improved performance.

However, If you’re looking for an API that better exposes how GPUs really work, with an high degree of control over resource management, and available for cross-platform (both Desktop and mobile), multi-threaded applications, then you should definitely consider using Vulkan.

Applications which use Vulkan well can take advantage of multi-threading, and benefit from reduced CPU load and memory footprint, as well as smoother rendering with fewer hitches caused by thicker driver abstractions.

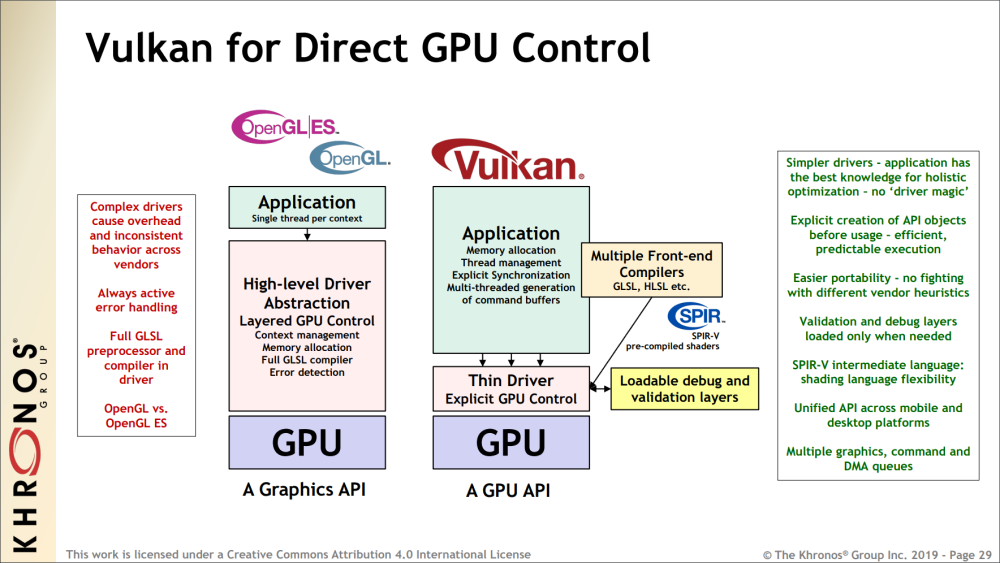

In the image above you can also see that OpenGL includes a complete shader compiler in the driver, which increases the level of abstraction and complexity of the driver, leading to additional CPU overhead.

The predicable execution specified in the image refers to the fact that, by using Vulkan, we can set up the rendering work ahead of time, which allows for better holistic (overall) optimization by the driver.

Vulkan is an explicit API with a thin driver abstraction, making it easier to develop and port to different platforms. This also means that vendor implementations don’t have to rely on advanced heuristics to guess what the application is trying to do.

SPIR-V is a binary intermediate representation for shader programs. In Vulkan, you can still write the shader code for programmable stages in a high-level shading language such as GLSL or HLSL, but a SPIR-V binary representation is needed when setting the pipeline state. Since it is pre-compiled, the driver can easily convert SPIR-V binary blob to shader machine (GPU) code.

1.3 - Important Vulkan concepts and components

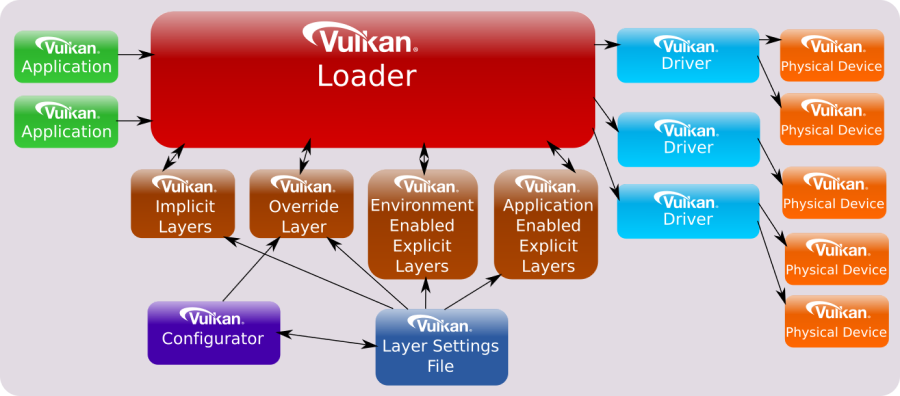

Vulkan defines a layered API in a layered architecture.

The components of the layered architecture are:

-

Vulkan Applications

-

The Vulkan Loader

-

Vulkan Layers

-

Drivers

-

VkConfig

These component interact with the Vulkan API by using, implementing, extentind, or interpcepting its functions. The Vulkan API is designed in a layered manner, which means that applications can use a subset of its functions that all Vulkan implementation have to support as part of the Vulkan core specification. Then, applications can also use additional layers above the core one for debugging, validation, and other purposes.

1.3.1 - Vulkan Applications

To use the functionality specified in the Vulkan specification, applications use the function prototypes included in the header files provided by the Khronos Group. These files can be found in the Vulkan-Headers repository on GitHub, or in the Vulkan SDK. Vulkan functions are somewhat defined in the Vulkan Loader module (more on this shortly), which is installed via driver update packages or the Vulkan SDK. Alternatively, the Vulkan loader can be compiled from source code. Anyway, linking the Vulkan loader module is required for Vulkan applications.

Generally, a Vulkan application uses the Vulkan API to perform the following sequence of tasks:

- Initialize the hardware and software (to detect the available devices and let the loader start its job).

- Create a presentation surface using a Window System Integration (WSI) extension.

- Set the pipeline state.

- Create resources to bind to the stages of the pipeline using descriptors.

- Record commands in command buffers in a render loop.

- Submit the command buffers to GPU queues for processing.

We will cover each of the above issues in detail, both in this tutorial and in upcoming ones.

Direct Exports

The Vulkan loader module exports all core entry-points and some additional platform-specific functions (more on this shortly). Therefore, most of the time we can simply link the loader and call the Vulkan functions using the prototypes declared in the related header files.

On Linux, using GCC, we can link the Vulkan loader with the following command, which links “libvulkan.so”. This file is a symbolic link to “libvulkan.so.1”, which in turn is a symbolic link to the latest installed Vulkan loader (e.g., “libvulkan.so.1.0.42.0”, which is the actual shared library).

g++ main.cpp -o main.out -lvulkan

On Windows, we can implicitly link the Vulkan loader with the help of the related import library “vulkan-1.lib”. This file includes a table where each element stores the name of a function and the module where to find the related definition. An import library simply tells the linker not to panic if it cannot find a definition, because the Windows loader will be able to dynamically resolve\fix it at run-time by loading the related shared module (“vulkan-1.dll”, which is just a copy to the latest Vulkan loader installed (e.g. “vulkan-1-999-0-0-0.dll”).

Dynamic linking

Alternatively, we can load the Vulkan Loader module at runtime with dlopen (Linux) or LoadLibrary (Windows). Then, we only need to query the addresses of vkGetInstanceProcAddr and vkGetDeviceProcAddr with dlsym (Linux) or GetProcAddress (Windows). At that point, with vkGetInstanceProcAddr and vkGetDeviceProcAddr we can query the addresses of other Vulkan functions.

In the next section we will see what’s the difference between using direct exports and dynamic linking, as well as the difference between vkGetInstanceProcAddr and vkGetDeviceProcAddr.

Static linking was possible in the past, but it is no longer an option now.

1.3.2 - Vulkan Loader

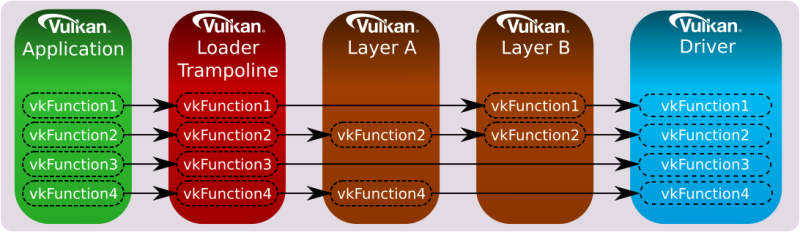

It was previously stated that the loader somewhat define Vulkan functions, but what exactly does it means? Well, you can think of the definitions in the loader as trampolines that redirect application calls to the appropriate implementations in the graphics drivers, passing through an arbitrary number of layers.

The loader can insert optional layers between the application and the drivers, which can offer specialized functionality. The loader is essential for ensuring that Vulkan functions are dispatched to the correct set of layers and drivers. By inserting layers into a call-chain, the loader can enable them to process Vulkan functions before the driver is invoked.

To understand how the loader directs API calls, it is necessary to have a basic knowledge of certain key concepts. The most important one is that many objects and functions in Vulkan can be separated into two groups:

-

Instance-specific

-

Device-specific

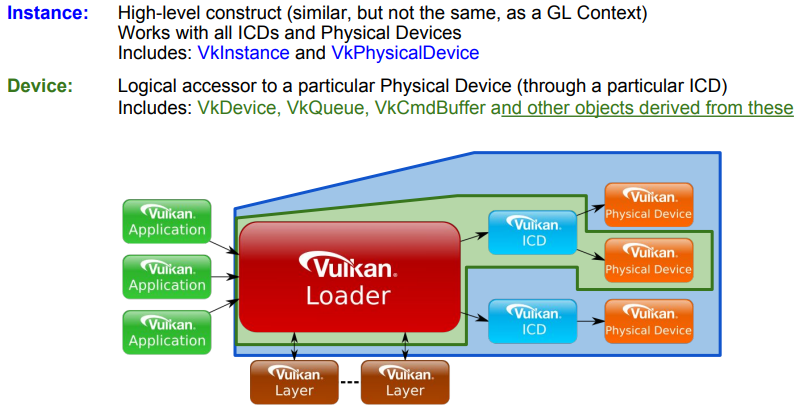

“Instance-specific” refers to functions or objects that can be used to provide system-level information and functionality (i.e., something that can be applied to all available GPUs, and their drivers, installed on a user’s system).

“Device-specific” means something which applies to a particular physical device on the user’s system.

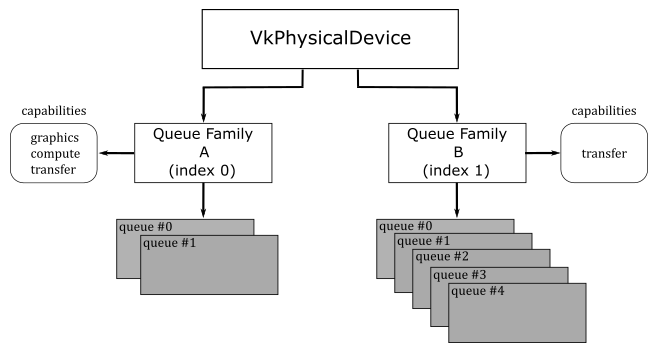

Examples of instance objects are VkInstance and VkPhysicalDevice. We will use these objects as interfaces to call instance functions. Instance functions are functions that take either an instance object as their first parameter, or nothing at all.

Examples of device objects are VkDevice VkQueue, and VkCommandBuffer. We will use these objects as interfaces to call device functions. Device functions are functions that take a device objec as their first parameter.

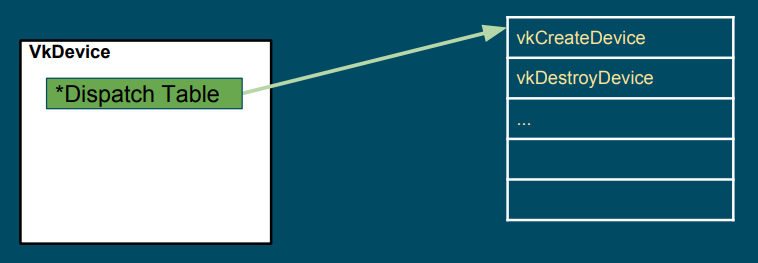

VkInstance, VkPhysicalDevice, VkDevice VkQueue, and VkCommandBuffer are called dispatchable objects, which means they contain a pointer to a dispatch table. A dispatch table is an array of function pointers (including core and possibly extension functions) used to step to the next entity in a call chain. An entity could be the loader, a layer or a driver (more on this shortly).

The loader maintains two types of dispatch tables: instance specific, created during the call to vkCreateInstance (which creates a VkInstance), and device specific, created during the call to vkCreateDevice (which creates a VkDevice). Thus, the loader builds an instance call chain for each VkInstance that is created, and a device call chain for each VkDevice that is created (that is, the actual instance function called depends on the VkInstance object passed as its first parameter, which points to its own dispatch table; the same applies to device functions). The pointer to the dispatch table stored in VkPhysicalDevice, VkQueue, and VkCommandBuffer is a duplicate of the pointer from the existing parent object of the same level (VkInstance versus VkDevice). For example, the dispatch table pointer in a VkPhysicalDevice object is a duplicate of the pointer in the corresponding VkInstance object, while the dispatch table pointer in a VkQueue or VkCommandBuffer object is a duplicate of the pointer in the corresponding VkDevice object.

At the time of calling vkCreateInstance or vkCreateDevice, the application and the system can each specify optional layers to be included. The loader will initialize the specified layers to create a call chain for each Vulkan function, and each entry of the dispatch table in the first entity of the call chain will point to the corresponding entry in the next entity of the same chain. Further information will be provided in the next section.

After an application makes a Vulkan function call, this is usually directed to a trampoline function in the loader. Trampoline functions are small, simple functions that jump to the appropriate dispatch table entry, which is located in the loader, layer, or device driver, depending on the pointer to the dispatch table of the dispatchable object passed as the first parameter. Then, the first dispatch table entry can point to the corresponding entry in the next entity in the call chain (layer or device driver), which calls the corresponding entry in the next entity, and so on.

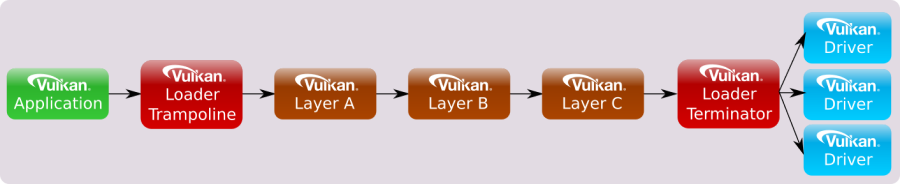

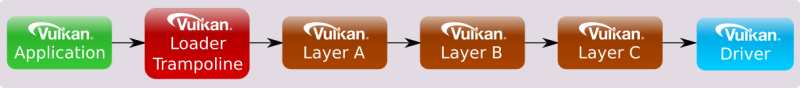

Additionally, for functions in the instance call chain, the loader has an additional function called a terminator. This function is called after all enabled layers to marshal the appropriate information to all available drivers.

Device call chains are generally simpler because they deal with only a single device. As a result, the specific driver that exposes this device can always be the terminator of the chain.

The functions exported by the loader are trampolines to function pointers stored in the dispatch tables mantained by the loader itself.

However, it is possible to set up a custom dispatch table by querying instance functions using vkGetInstanceProcAddr, and device functions using vkGetDeviceProcAddr. The main difference between the two is that vkGetInstanceProcAddr may return the address of the same trampoline function exported by the loader, whereas vkGetDeviceProcAddr returns the actual function pointer stored in the dispatch table of the loader, whenever possible. This can optimize the call chain even further by removing the loader altogether in most scenarios:

In this tutorial series, we will use the function prototypes declared in the header files provided by the Khronos Group to directly call the functions exported by the loader. However, we will also use vkGetInstanceProcAddr and vkGetDeviceProcAddr to query the addresses of instance and device functions exposed but not exported by the loader.

At this point, it can be explained why a physical device (vkPhysicalDevice) is an instance object (instead of a device object). A physical device can be considered a stand-alone object representing a generic GPU installed on the user’s system. On the other hand, a device object is considered as a logical accessor to a particular physical device through a particular ICD (driver). Indeed, VkDevice objects are also called logical device objects. Multiple logical devices can be created from the same physical device, each with its own state and resources independent of other logical devices.

Extensions

Extensions in Vulkan refer to additional functionalities that can be provided by a layer, the loader, or a driver to extend the core Vulkan functionality. They can introduce new features that may be experimental, specific to a particular platform or device, or come with a performance cost. Consequently, extensions are not enabled by default and must be explicitly enabled by an application before they can be used. This approach ensures that applications only pay for the functionalities they need, and that the use of extensions is intentional, which helps improve performance and portability.

Extensions can introduce new functions, enums, structs, or feature bits to enhance the core functionality of Vulkan. While the related definitions are included in the Vulkan Headers by default, using them without enabling the corresponding extensions can result in undefined behavior. Therefore, to ensure correct and predictable behavior, applications must explicitly enable the required extensions before using any extended Vulkan functionality.

Vulkan extensions are categorized based on the type of functions they offer. This categorization results in extensions being divided into instance or device extensions where the majority of functions in the extension correspond to the respective type. For instance, an “instance extension” mainly comprises “instance functions,” which accept instance objects as their first parameter to introduce new general functionality accessible to the whole system. On the other hand, a “device extension” mainly includes “device functions,” which accept device objects as their first parameter to provide new functionality to a particular device.

Usually, Vulkan extensions include an author prefix or suffix modifier to every structure, enumeration entry, command entry-point, or define that is associated with it. For example, the “KHR” prefix indicates Khronos-authored extensions, and it can also be found on structures, enumeration entries, and commands associated with those extensions. The “EXT” suffix is used for multi-company authored extensions, while “AMD,” “ARM,” and “NV” are used for extensions authored by AMD, ARM, and Nvidia, respectively.

Windows System Integration (WSI) extensions are well known Vulkan extensions targeting a particular Windowing system and designed to interface between the Windowing system and Vulkan. Some WSI extensions are valid for all windowing system, but others are particular to a given execution environment. The loader only enables and directly exports those WSI extensions that are appropriate to the current environment (Windows, Linux, etc.).

It’s worth noting that although the loader can export entry points directly for these extensions, an application can use them only under certain conditions:

- At least one physical device must support the extension(s)

- The application must use such a physical device when creating a logical device

- The application must request the extension(s) be enabled while creating the instance or logical device (this depends on whether or not the given extension works with an instance or a device)

Only then the WSI extension can be properly used in a Vulkan program.

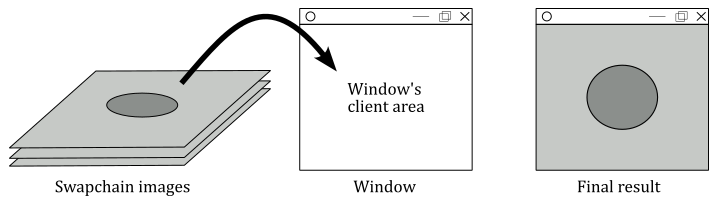

Showing the results of rendering operations on the screen is a fundamental operation. So, why is it provided as an extension instead of a core functionality? Well, there are at least two reasons:

Vulkan is a platform-agnostic API, and each platform may use different window systems that interact with the operating system in different ways. Therefore, Vulkan cannot provide a generic interface that works for all window systems on all platforms.

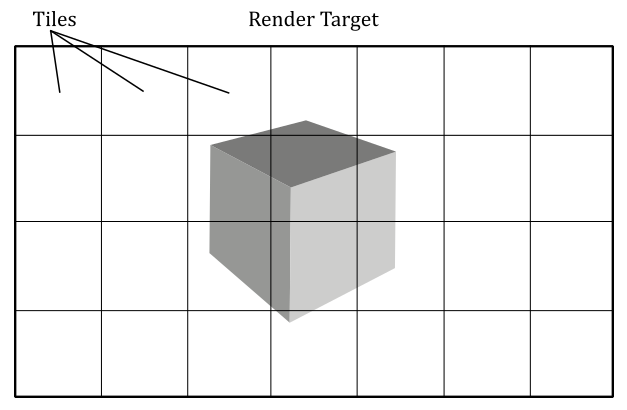

A Vulkan application is not obligated to show the result of graphics operations on the screen. Instead, the specification states that we must store the result on a render target (usually a texture). Then, we can save the render target to a disk file or display it to the user on the screen. Additionally, rendering to a render target is a platform-specific operation that depends on the window system.

As Vulkan can be easily expanded, there may be extensions that the loader is not aware of, but that it still needs to create. In such cases, if the extension is a device extension, the loader will pass the unknown entry-point down the device call chain until it reaches the appropriate driver entry-points (more on this in the next section). The same will happen if the extension is an instance extension that takes a physical device as its first parameter.

However, for all other instance extensions the loader will fail to dispatch the call. The reason is that the loader need information on the physical devices that support the extension (it can’t simply pass the call to all the device drivers available in the system in the hope that the extension is supported by all of them).

At times, enabling an extension may also require enabling an optional device feature. Vulkan features define functionality that may not be supported on all physical devices. If a device advertises support for a feature, it still needs to be enabled (similar to an extension). However, once enabled, the feature becomes core functionality instead of an extension.

the difference between features and extensions is that features refer to functionality that may be supported by a physical device, whereas extensions refer to functionality that is either implemented by a Vulkan implementation or can be injected into the call chains (instance or device) by loading the appropriate Vulkan layers.

1.3.3 - Vulkan Layers

Applications desiring Vulkan functionality beyond what Vulkan drivers on their system already expose, may use various layers to augment the API. Each layer can choose to hook (intercept) Vulkan functions to be inspected, augmented or simply ignored. However, any function a layer does not hook is skipped for that layer, and the control flow will continue on to the next layer, or driver. As a result, a layer has the option to intercept all Vulkan functions, or only a specific subset that it finds relevant.

Vulkan layers allow applications to use additional functionalities, mainly for development purposes. For example:

-

Validating API usage

-

Tracing API calls

-

Debugging aids

-

Profiling

-

Overlay

Layers are unable to introduce new Vulkan core API entry-points that are not already available in the vulkan.h header file. However, they can provide implementations of extensions that introduce additional entry-points that are beyond what is available without those layers. These extra extension entry-points can be accessed through specific extension header files.

Layers are packaged as shared libraries the loader can find and dynamically load using some manifest files (data files in JSON format) installed by the Vulkan SDK. Since layers are optional and loaded dynamically, they can be enabled or disabled based on requirements. During application development and debugging, enabling certain layers can help ensure the proper usage of the Vulkan API. However, when releasing the application, those layers become redundant and can be disabled, resulting in a faster performance of the application.

Layers can be classified into two categories:

-

Implicit Layers

-

Explicit Layers

Explicit layers need to be explicitly enabled by an application.

Implicit layers are enabled by default, unless an additional manual enable step is required. These layers have an additional requirement compared to explicit layers, as they need to be disabled by an environmental variable. This is because implicit layers are not visible to the application and may potentially create issues. That way, users have the option to disable an implicit layer if it causes problems.

On any system, the loader searches specific areas for information about the layers that it can load, both implicitly and explicitly. That is why it is crucial to install the Vulkan SDK as it configures the system by generating the necessary keys (in the Windows registry) and files that contain information about where to locate the layers, or more precisely, the shared libraries that implement them.

Please note that layers are typically not enabled when releasing an application. Hence, users do not require installing the Vulkan SDK. They only need to have the Vulkan Loader and drivers installed through the vendor driver update packages.

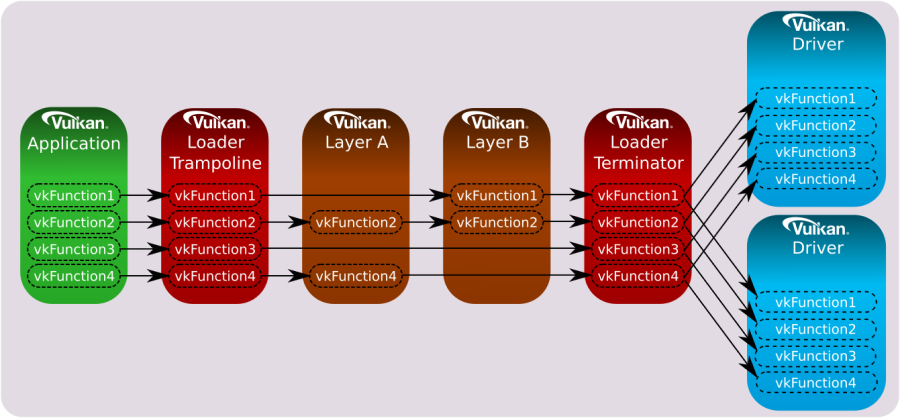

A layer has the capability to intercept instance functions, device functions, or both. To intercept instance functions, a layer must participate in the instance call chain. To intercept device functions, a layer must participate in the device call chain. However, as mentioned earlier, a layer is not required to intercept all instance or device functions. It can instead choose to intercept only a subset of those functions.

When a layer intercepts a particular Vulkan function, it generally calls down the instance or device call chain as required. The loader, along with all layer libraries that participate in a call chain, work together to ensure the correct sequencing of calls from one entity to the next. This coordinated effort for call chain sequencing is known as distributed dispatch. Under distributed dispatch, each layer is responsible for correctly calling the subsequent entity in the call chain. Consequently, a dispatch mechanism is necessary for all Vulkan functions that a layer intercepts.

For example, if only specific instance functions were intercepted by the enabled layers, then the instance call chain would appear as follows:

Likewise, if only specific device functions were intercepted by the enabled layers, then the device call chain would appear as follows:

The loader is responsible for dispatching all core and instance extension functions to the first entity in the call chain.

If you’re curious about the dispatch mechanism that allows a layer to know the next entity in the call chain, along with the address of an intercepted Vulkan function in the next entity. Well, long story short, the loader is aware of all the enabled layers beacause this information is required before calling vkCreateInstance. Additionally, each layer must intercept vkCreateInstance, and provide implementations for vkGetInstanceProcAddr and vkGetDeviceProcAddr, which return the addresses of intercepted functions in (the shared module\library of) the layer. These two functions must also be exported symbols in (the shader module\library of) the layer, allowing the loader to get their addresses to create a linked list where each element stores the addresses of both these functions for each layer in the call chain.

When an application calls vkCreateInstance, the corresponding function in the loader is invoked. During initialization, the loader creates the linked list and stores it in a field of a structure passed as an input parameter to vkCreateInstance. Then, the loader uses the first element of the linked list to retrieve the address of vkGetInstanceProcAddr in the first entity of the call chain, and uses this address to build its own dispatch table, where each element is the address of a function intercepted in the first entity. At that point, the loader pops the first element off the front of the linked list and calls vkCreateInstance into the first entity, passing the same arguments it received in input by the application. The implementation of vkCreateInstance in the first entity performs the same task as the loader: it uses the first element in the linked list to retrieve the address of vkGetInstanceProcAddr in the second entity of the call chain, and uses this address to build its own dispatch table, where each element is the address of a function intercepted in the second entity. At that point the first entity pops off the front of the linked list, and calls the vkCreateInstance of the third entity. And so on.

Now, what happens if a layer doesn’t intercept a function? In other words, what address should return vkGetInstanceProcAddr for non-intercepted functions? Well, in that case a layer can use the address of vkGetInstanceProcAddr in the next entity to forward the call down the call chain. Observe that vkCreateInstance is the first function invoked in a layer, so that the address of vkGetInstanceProcAddr in the next entity is always available for a layer when other Vulkan functions are invoked in the call chain.

Implementing vkGetDeviceProcAddr and vkCreateDevice in a layer follows almost the same pattern as the instance versions, with the layers on a different call chain, although - the device call chain instead of the instance call chain).

Additional information can be found in [2].

The loader supports filter environment variables that can forcibly enable and disable the layers that are already detected by the loader based on default search paths and other environment variables. The filter variables are compared against the layer name provided in the layer’s manifest file.

Meta-layers consist of a collection of layers that are arranged in a specific order to ensure proper interaction between them. This approach was initially used to group Vulkan Validation layers together in a specific order to prevent conflicts. The reason for this was that validation layers was divided into multiple component layers. However, the new validation layer pull everything into a single layer, thereby eliminating the need for meta-layers.

1.3.4 - Vulkan Configurator

VkConfig is a tool included in the Vulkan SDK that uses meta-layers to group layers together based on user’s preferences. It can be used to find layers, enable or disable them, change layer settings, and other useful features.

VkConfig creates three configuration files, two of which are intended to operate in conjunction with the Vulkan loader and layers. These files are:

- The Vulkan Override Layer

- The Vulkan Layer Settings File

- VkConfig Configuration Settings

The “Override Layer” is a special implicit meta-layer created by VkConfig, and available by default when the tool is running. Once VkConfig exits, the override layer is removed, and the system should return to standard Vulkan behavior. Whenever the override layer is present, the loader will pull it into the layer call stack along with all (implicit and explicit) layers to load. This layer forces the loading of the desired layers that were enabled inside of VkConfig, and disables those layers that were intentionally disabled (including implicit layers).

The Vulkan Layer Settings file can be used to tell each enabled layer which settings to use. Per-layer settings are loaded by each layer library and stored in the vk_layer_settings.txt file. This file is either located next to the Vulkan application executable or set globally and applied to all Vulkan applications thanks to Vulkan Configurator.

The VkConfig Configuration Settings file stores the application settings for VkConfig.

The Vulkan Configurator does not make any system-wide changes to a system, but it does make user-specific changes.

1.3.5 - Vulkan Drivers

A module that implements the Vulkan specification, either through supporting a physical hardware device directly, converting Vulkan commands into native graphics commands (like MoltenVK for macOS and iOS), or simulating Vulkan through software, is considered “a driver”. The most common type of driver is the Installable Client Driver (or ICD). These are drivers that are provided by hardware vendors to interact with the hardware they provide.

The loader is responsible for discovering available Vulkan drivers on the system. Given a list of available drivers, the loader can enumerate all the available physical devices and provide this information to applications.

Vulkan allows multiple ICDs, each supporting one or more devices. Each of these devices is represented by a VkPhysicalDevice object. The loader is responsible for discovering available Vulkan ICDs on the system.

2 - GUI applications

In order to develop graphics applications, it is necessary to create a window for rendering. However, before proceeding, it is required to have a basic understanding of how applications with a graphical user interface (GUI) operate on both Windows and Linux.

2.1 - Windows applications

The content of this section has been heavily inspired by “Programming Microsoft Visual C++, Fifth Edition” by David J. Kruglinski, George Shepherd and Scott Wingo.

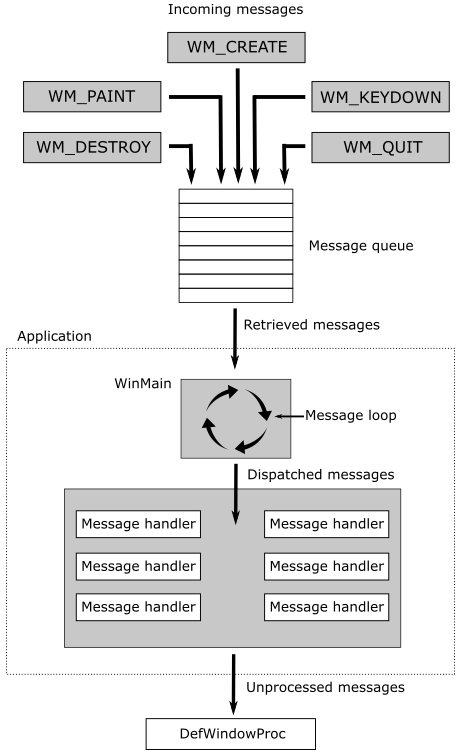

Windows applications use an event-driven programming model (illustrated in the following image) in which programs respond to events by processing messages sent by the operating system. In this context, an event is a keystroke, a mouse click, or a command for a window to repaint itself. The entry point of a Windows application is a function called WinMain, but most of the action takes place in a function known as the window procedure. The window procedure processes messages sent by the OS to the application a window belongs to. WinMain creates that window and then enters a message loop, retrieving messages and dispatching them to the window procedure. Messages wait in a message queue until they are retrieved. The main occupation of a Windows application is to respond to the messages it receives, and in between messages, it does little except wait for the next message to arrive. You exit the message loop when a WM_QUIT message is retrieved from the message queue, signaling that the application is about to end. This message is sent by the OS when the user closes the window. When the message loop ends, WinMain returns, and the application terminates.

Observe that window messages can also be directly sent to a window procedure, bypassing the message queue. If the sending thread is sending a message to a window created by the same thread, the specified window’s window procedure is called. However, if a thread is sending a message to a window created by another thread, things become more complicated. Fortunately, we don’t need to know the low-level details right now.

2.1.1 - Window Procedure

As stated earlier, a window procedure is a function that receives and processes messages sent by the OS to the application a window belongs to. A window class defines important characteristics of a window such as its window procedure address, its default background color, and its icon. Every window created with a particular class will use that same window procedure to respond to messages.

When the application dispatches a message to a window procedure, it also passes additional information on the message as arguments in its input parameters. That way, the window procedure can perform an appropriate action for a message by consuming the related message data. If a window procedure does not process a message, it must send the message back to the system for default processing by calling the DefWindowProc function, which performs a default action and returns a message result. The window procedure must then return this value as its own message result.

Since a window procedure is shared by all windows belonging to the same class, it can process messages for different windows. To identify the specific window a message is addressed to, a window procedure can examine the window handle passed as input parameter. The code provided in the window procedure to process a particular message is known as message handler.

2.1.2 - Messages

Windows defines many different message types. Usually, messages have names that begin with the letters “WM_”, as in WM_CREATE and WM_PAINT. The following table shows ten of the most common messages. For example, a window receives a WM_PAINT message when its interior needs repainting. You can think of a Windows program as a collection of message handlers.

| Message | Sent when |

|---|---|

| WM_CHAR | A character is input from the keyboard. |

| WM_COMMAND | The user selects a menu item, or a control sends a notification to its parent. |

| WM_CREATE | A window is created. |

| WM_DESTROY | A window is destroyed. |

| WM_LBUTTONDOWN | The left mouse button is pressed. |

| WM_LBUTTONUP | The left mouse button is released. |

| WM_MOUSEMOVE | The mouse pointer is moved. |

| WM_PAINT | A window needs repainting. |

| WM_QUIT | The application is about to terminate. |

| WM_SIZE | A window is resized. |

When the message loop dispatches a message, the window procedure is called, and you can retrieve the information on the message from its four input parameters:

-

The handle of the window to which the message is directed,

-

A message ID, and

-

Two 32-bit parameters known as wParam and lParam.

The window handle is a 32-bit value that uniquely identifies a window. Internally, the value references a data structure in which the OS stores relevant information about the window such as its size, style, and location on the screen.

The message ID is a numeric value that identifies the message type: WM_CREATE, WM_PAINT, and so on.

wParam and lParam contain information specific to the message type. For example, when a WM_LBUTTONDOWN message arrives, wParam holds a series of bit flags identifying the state of the Ctrl and Shift keys and of the mouse buttons. lParam holds two 16-bit values identifying the location of the mouse pointer (in screen coordinates) when the click occurred. At that point, you have all you need to know to process the WM_LBUTTONDOWN message in the window procedure. Conventionally, WinMain should return the value stored in the wParam of the WM_QUIT message.

The only criticism to the above explanation is that a graphics application performs the bulk of its processing exactly in between messages. Although, the sample we will examine in this tutorial is an exception as its only purpose is to show a window on the screen (i.e., no relevant graphics operations are involved).

2.1.3 - How to create a window

The following listing demonstrates how to create and show a window on the screen.

#include <windows.h>

int WINAPI WinMain(HINSTANCE hInstance, HINSTANCE, char*, int nCmdShow)

{

ApplicationClass* pApp();

// Size of the client area

uint32_t width = 1280;

uint32_t height = 1280;

// Initialize the window class.

WNDCLASSEX windowClass = { 0 };

windowClass.cbSize = sizeof(WNDCLASSEX);

windowClass.style = CS_HREDRAW | CS_VREDRAW;

windowClass.lpfnWndProc = WindowProc;

windowClass.hInstance = (HINSTANCE)hInstance;

windowClass.hCursor = LoadCursor(NULL, IDC_ARROW);

windowClass.lpszClassName = "ClassName";

RegisterClassEx(&windowClass);

RECT windowRect = { 0, 0, width, height };

AdjustWindowRect(&windowRect, WS_OVERLAPPEDWINDOW, FALSE);

// Create the window and store a handle to it.

winParams.hWindow = CreateWindow(

windowClass.lpszClassName,

"Window name",

WS_OVERLAPPEDWINDOW,

CW_USEDEFAULT,

CW_USEDEFAULT,

windowRect.right - windowRect.left,

windowRect.bottom - windowRect.top,

nullptr, // We have no parent window.

nullptr, // We aren't using menus.

(HINSTANCE)hInstance,

pApp);

// Show the window

ShowWindow(winParams.hWindow, nCmdShow);

// Enter the message loop

return ApplicationClass::MessageLoop();

}

To create a window, we first need an instance of a window class (structure WNDCLASSEX) to specify some basic information about all the windows created using that instance. Below is a list of the most important fields of WNDCLASSEX.

style specifies some additional information about the window. CS_HREDRAW | CS_VREDRAW indicates to redraw the entire window if a size adjustment changes the width and\or height of the client area.

hCursor specifies the cursor showed when this is over the window’s client area.

hInstance specifies the application a window belongs to. This information is passed as an argument to the first parameter of WinMain.

lpszClassName specifies the name we want to give to the window class.

lpfnWndProc specifies the address of the window procedure.

RegisterClassEx registers the window class so that we can use an instance of this class to create one or more windows with a specific style, window procedure, etc.

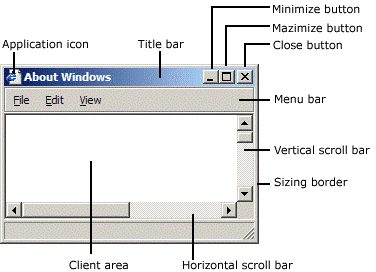

CreateWindow, as the name suggests, creates a window and returns its handle. It takes the name of a window class and some additional information. In particular, it needs the size of the entire window, so we must calculate it because in graphics application we tipically set the size of the window’s client area where we want to draw rather than the size of the whole window area. The client area of a window is where we are allowed to draw.

AdjustWindowRect returns such information if you pass the size of the client area and the style of the window you’re going to create with CreateWindow. WS_OVERLAPPEDWINDOW specifies a window with a title bar and no menu.

With the last parameter of CreateWindow we can specify a pointer the OS will return to us in response to a WM_CREATE message (sent by the OS to an application as soon as a window is created; that is, when CreateWindow returns). We will use this last parameter to save an instance of the application class with the purpose to access it later. The following listing shows an example of a window procedure.

// Main message handler for the application.

LRESULT CALLBACK WindowProc(HWND hWnd, UINT message, WPARAM wParam, LPARAM lParam)

{

ApplicationClass* pApp = reinterpret_cast<ApplicationClass*>(GetWindowLongPtr(hWnd, GWLP_USERDATA));

switch (message)

{

case WM_CREATE:

{

// Save the ApplicationClass pointer passed in to CreateWindow.

LPCREATESTRUCT pCreateStruct = reinterpret_cast<LPCREATESTRUCT>(lParam);

SetWindowLongPtr(hWnd, GWLP_USERDATA, reinterpret_cast<LONG_PTR>(pCreateStruct->lpCreateParams));

}

return 0;

case WM_PAINT:

if (pApp && pApp->IsInitialized())

{

pApp->OnUpdate();

pApp->OnRender();

}

return 0;

case WM_KEYDOWN:

switch (wParam)

{

case VK_ESCAPE:

PostQuitMessage(0);

break;

}

if (pApp)

{

pApp->OnKeyDown(static_cast<UINT8>(wParam));

}

return 0;

case WM_KEYUP:

if (pApp)

{

pApp->OnKeyUp(static_cast<UINT8>(wParam));

}

return 0;

case WM_DESTROY:

PostQuitMessage(0);

return 0;

}

// Handle any messages the switch statement didn't.

return DefWindowProc(hWnd, message, wParam, lParam);

}

Before returning, CreateWindow sends a WM_CREATE message to the window procedure. In the WM_CREATE message handler, lParam is a pointer to CREATESTRUCT. The lpCreateParams field of this structure contains the last parameter passed to CreateWindow. This means we can call SetWindowLongPtr to save the instance of the application class in the user data associated with the window (an extra memory space reserved to the user), and retrieve it with GetWindowLongPtr later.

A WM_DESTROY is sent to the window procedure of the window being destroyed after the user closes it. The WM_DESTROY message handler calls PostQuitMessage, which queues a WM_QUIT message. That way, we can exit the message loop (more on this shortly).

Generally, WM_PAINT messages are both sent to the window procedure, and posted to the message queue throughout the application’s lifetime. That way, we can use the WM_PAINT message handler for updating and rendering purposes.

At the end of WinMain we call MessageLoop, and the application enters a message loop where PeekMessage retrieves a message from the message queue, and save the related information in the MSG structure passed in the first parameter before returning TRUE (otherwise it returns FALSE to indicate no message were available). DispatchMessage dispatches a message to the window procedure. TranslateMessage translates virtual-key messages (WM_KEYDOWN, WM_KEYUP) into character messages (WM_CHAR) containing ASCII characters. That way you can better distinguish the various keys of the keyboard.

int ApplicationClass::MessageLoop()

{

// Message loop

MSG msg;

bool quitMessageReceived = false;

while (!quitMessageReceived)

{

if (PeekMessage(&msg, NULL, 0, 0, PM_REMOVE))

{

TranslateMessage(&msg);

DispatchMessage(&msg);

if (msg.message == WM_QUIT) {

quitMessageReceived = true;

break;

}

}

}

// Return this part of the WM_QUIT message to Windows.

return static_cast<char>(msg.wParam);

}

2.2 - Linux applications

The content of this section has been heavily inspired by “Xlib Programming Manual, for version 11” by Adrian Nye, as well as additional information gathered from Wikipedia and other online resources.

On linux we have different kernel versions, display servers, windows managers, communication protocols, and protocol client libraries. In this section, I will just explain the necessary requirements for creating a window using the Xlib library, which is easier and cleaner to use for our purposes (while I am aware that Xlib has some limitations and drawbacks, they are not significant within the scope of this tutorial series, which is solely intended to provide guidance on programming with the Vulkan API).

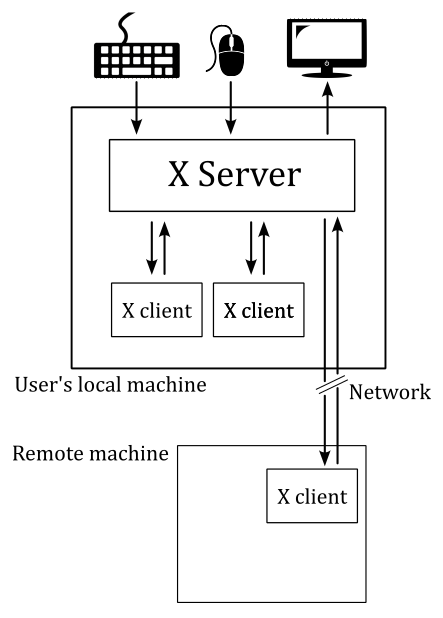

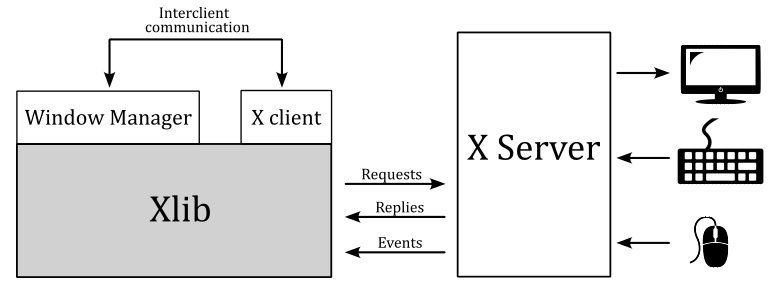

2.2.1 - X Window System

The X Window System (X11, or simply X) is a windowing system based on a client-server model that provides basic GUI support for creating and moving windows on the display device, and interacting with a mouse and keyboard. It also supports basic drawing of graphical primitives. This model has a main X server providing a display service that clients can interact with, even over a network. This means the server and its clients need to communicate via a network-transparent protocol. For this purpose, the X protocol makes the network transparent, so that the server and its clients may run on the same machine or different ones (including across various architectures and operating systems).

The X Window System mainly defines a protocol (called X protocol) and some graphics primitives. It contains no specification for application user-interface design, such as button, menu, or window title-bar styles. Rather, the responsibility of defining and supplying these details falls on application software such as window managers, GUI widget toolkits and desktop environments, or application-specific graphical user interfaces. As a result, there is no typical X interface, and several different desktop environments have become popular among users.

The X Window System uses certain terms in a specific manner that sometimes can diverge from their common usage - particularly “display” and “screen”. The following is a subset of the common used terms, presented for convenience:

device: A graphics device such as a computer graphics card or a computer motherboard’s integrated graphics chipset.

monitor: A physical device such as a CRT or a flat screen computer display.

screen: An area into which graphics may be rendered, either into system memory or within a graphics device.

display: A collection of screens, even involving multiple monitors, generally configured to allow the mouse to move the pointer to any position within them.

2.2.2 - Xlib

Xlib (also known as libX11) is an X Window System protocol client library written in C language. It contains functions for interacting with an X server. These functions allow programmers to write applications without knowing the details of the X protocol: Xlib calls are automatically translated to X protocol requests sent to the server.

In the context of the X Window System, a window manager is a special type of X client that controls the placement and appearance of windows to help provide them a desktop environment. It typically decorate the windows with a title bar and various tools for iconifying and resizing applications. Much of the communication between clients and the window manager (and vice versa) occurs through properties (the rest occurring through events). Many of the properties are known as hints because they may not necessarily be honored by the window manager.

The Xlib functions that send requests to the server, typically do not immediately do it. Instead, they store the requests in a buffer referred to as the request buffer. The request buffer may contain a variety of requests to the server, not only those that have a visible effect on the screen. The request buffer is guaranteed to be flushed (which means that all pending requests are transmitted to the server) after a call to either the XSync or XFlush functions, after a function call that returns a value from the server (these functions block until a response is received), or under certain conditions.

For each application, the X server provides a queue where it stores events that are generated for any of the application’s windows. These events are dispatched by the X server asynchronously in the appropriate queue. Events include user input (key press, mouse click and movements over a window, or window resizing) as well as interaction with other programs (for example, if an obscured portion of a window is exposed when another overlapping window is moved, closed, or resized, the client must redraw it).

Client applications can inspect and retrieve events from the event queue by calling specific Xlib functions. Some of these functions may block, in which case they also flush the request buffer. Errors are instead received and treated asynchronously: applications can provide an error handler that will be called whenever an error message from the server is received.

The content of a window is not guaranteed to be preserved if the window or part of it is made not visible. If this occurs, the application is sent an Expose event when the invisible region of the window is made visible again. The aplication is then supposed to draw the window content again.

The functions in the Xlib library can be grouped in:

-

connection operations (XOpenDisplay, XCloseDisplay, …)

-

requests to the server, including requests for operations (XCreateWindow, XSetWindowProperty, …) and requests for information (XGetWindowProperty, …)

-

operations that are local to the client: operations on the event queue (XNextEvent, XPeekEvent, …) and other operations on local data (XLookupKeysym, XParseGeometry, XSetRegion, XCreateImage, XSaveContext, …)

In Xlib, the primary data types include the ‘Display’ structure and several identifiers.

The Display structure of the Xlib library not only provides information about the display, but also contains significant details regarding the communication channel between the client and the server. On Unix-like operating systems, this structure includes the file handle of the socket of this channel. Most Xlib functions require a Display structure as an argument because they either operate on the channel or are relative to a specific channel. In particular, all Xlib functions that interact with the server need this structure for accessing the channel. Some other functions need this structure, even if they operate locally, as they operate on data relative to a specific channel. Operations of this kind include for example operations on the event queue.

Windows, colormaps, and other similar objects are managed by the server, which means that the implementation details are all stored in the server. The client can only interact with these objects through their identifiers. It is not possible for the client to directly manipulate an object, but it can request the server to perform operations on the object by specifying its identifier.

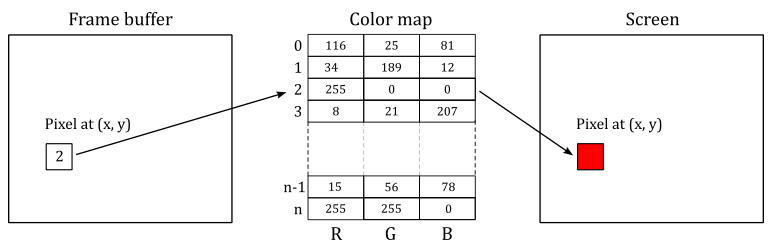

A colormap is a lookup table used to map pixel values from a frame buffer (a memory buffer containing data representing all the pixels in a complete video frame) to pixel colors at the corresponding location on the screen.

The types Windows, Pixmap, Font, Colormap, etc. are all identifiers, which are 32-bit integers. To create a window, a client sends a request to the server via an Xlib function call. The server responds with a unique identifier for the new window. The client can then use this identifier to perform additional operations on the same window, such as resizing or moving it.

Every window has a predefined set of attributes and a set of properties, all stored in the X server and accessible to the clients via appropriate requests. Attributes are data about the appearance and response of a window, such as its size, position, background color, which event types are received, etc. Properties are pieces of data that are attached to a window.

You can think of properties as global variables associated with a particular window and made available to all clients running under a server. Properties are used by clients to store information that other clients, including the window manager, might need or want to know.

Properties have a string name and a numerical identifier called atom. An atom is an ID that uniquely identifies a particular property where clients can store arbitrary data, usually to inform the window manager about its preferences. For example, the WM_NAME property is used to store the name of a window, which is typically displayed by the window manager at the top of the window. Therefore, a client can use the atom corresponding to WM_NAME to set a string that the window manager will read to set the window title.

Property name strings are typically all upper case, with words separated by underscores, such as WM_COLORMAP_WINDOWS. Atoms are used to refer to properties in function calls. This avoid the need to send arbitrary-length property name strings over the network, that it’s easier and reduce network traffic. An application gets the atom for a property by calling XInternAtom. You can specify the string name for a property as an argument to XInternAtom, and it returns the atom. From this point on, the application can use the atom to refer to that property.

Some atoms, called predefined atoms, are defined when the server initializes. These atoms are available as symbolic constants starting with XA_ and can be used directly by applications without the need to call the XInternAtom function.

XSetStandardProperties and XSetWMProperties can be used to give the window manager some information about your window’s preferences. In particular, these functions can set some essential window properties that are required for a typical application. For example, the window title (WM_NAME property), the icon, and the size hints for the window in its normal state.

The WM_PROTOCOLS property is used to enable applications to receive notifications of specific events or conditions. It contains a list of atoms (that is, it is a property that can refer to other properties through its atoms), each identifying a protocol that represent a condition the application want to be notified with a ClientMessage event. By registering for a protocol, an application can indicate that it is capable of handling certain events, such as window close or iconify requests, from the window manager. The xclient field of the event will contain the atoms for both the WM_PROTOCOLS property and one of the properties listed below

- WM_TAKE_FOCUS: Assignment of keyboard focus.

- WM_SAVE_YOURSELF: Save client state warning.

- WM_DELETE_WINDOW: Request to delete top−level window.

The NET_WM_STATE property is also a list of atoms, each describing a specific window state although. For example, NET_WM_STATE_FULLSCREEN is the property that correspond to an atom included in NET_WM_STATE that specify a window in full screen.

Unlike properties, window attributes are defined in regular structures. In particular, the XWindowAttributes and XSetWindowAttributes structures include information about about how a window is to look and act. The window attributes defined in XSetWindowAttributes can be set by calling XChangeWindowAttributes. On the other hand, XWindowAttributes is a read-only structure, and to change the window attributes associated to its field we need to using specific Xlib functions. For example, the window position can be set by calling XMoveWindow that inform the window manager how to move a top-level window. In the same way, the functions to resize a top-level window are XMoveWindow, XMoveResizeWindow, and XResizeWindow, while the function to change the border width of a window is XSetWindowBorderWidth.

However, it is not essential that you set any window attributes other than the window background and border. Therefore, if you need more information, refer to the Xlib documentation.

If you want to set the position of a window, remember that you need to distinguish between the coordinate system of the parent window, and the coordinate system of the root window (the screen). Fortunately, XTranslateCoordinates allows to translate coordinates in one window to the coordinate space of another window (more on this shortly).

2.2.3 - How to create a window

The following listing demonstrates how to create and show a window on the screen using the Xlib library.

#include <X11/Xlib.h>

#include <X11/Xutil.h>

#include <X11/Xatom.h>

Display* pDisplay = nullptr;

Atom wm_delete_window = 0;

bool quit = false;

int main(const int argc, const char* argv[])

{

ApplicationClass* pApp();

// Size of the client area

uint32_t width = 1280;

uint32_t height = 720;

// Check if DISPLAY is set as an environment variable, and stores a valid value.

const char *display_envar = getenv("DISPLAY");

if (display_envar == nullptr || display_envar[0] == '\0') {

printf("Environment variable DISPLAY requires a valid value.\nExiting ...\n");

fflush(stdout);

exit(1);

}

// Open a connection to the X server

pDisplay = XOpenDisplay(nullptr);

// Create the window

unsigned long white = WhitePixel(pDisplay, DefaultScreen(pDisplay));

Window win = XCreateSimpleWindow(pDisplay, DefaultRootWindow(pDisplay), 0, 0, width, height, 0, white, white);

// Set the event types the window wants to be notified by the X Server.

XSelectInput(pDisplay, win, KeyPressMask | KeyReleaseMask);

// Also request to be notified when the window is deleted.

Atom wm_protocols = XInternAtom(pDisplay, "WM_PROTOCOLS", true);

wm_delete_window = XInternAtom(pDisplay, "WM_DELETE_WINDOW", true);

XSetWMProtocols(pDisplay, win, &wm_delete_window, 1);

// Set window and icon names

XSetStandardProperties(pDisplay, win, "Window name", "Icon name", None, nullptr, 0, nullptr);

// Setup the Size Hints with the minimum window size.

XSizeHints sizehints;

sizehints.flags = PMinSize;

sizehints.min_width = 640;

sizehints.min_height = 360;

// Tell the Window Manager our hints about the minimum window size.

XSetWMSizeHints(pDisplay, win, &sizehints, XA_WM_NORMAL_HINTS);

// Request to display the window on the screen, and flush the request buffer.

XMapWindow(pDisplay, win);

XFlush(pDisplay);

// Enter the event loop

return ApplicationClass::EventLoop();

}

XOpenDisplay connect the client to the X server through the channel contained in the Display structure. XOpenDisplay takes the display name as its first parameter, but you can also pass nullptr so that it defaults to the value of the DISPLAY environment variable. That’s why we check if DISPLAY is set as an environment variable, and stores a valid value.

XCreateSimpleWindow creates an unmapped child window for a specified parent window, returns the window ID of the created window, and causes the X server to generate a CreateNotify event. DefaultRootWindow returns the root window (which fills the entire screen) of the default screen for the display passed as a parameter. The position coordinates are expressed relative to the parent window (in this case, the root window). WhitePixel returns the pixel value that maps to white using the default colormap for the specified screen: we use this value as the background and borded colors of the window.

Almost certainly the window manager will ignore the position coordinates passed to XCreateSimpleWindow. Therefore, if you need to create a window at a specific position, first you must translate the $(0,0)$ position of the window (passed as a parameter to XCreateSimpleWindow) to a screen position by calling XTranslateCoordinates. At that point, you can call XMoveWindow to specify a new location for the window.

XSelectInput sets the types of events the window wants to be notified by the X Server. In this case, we are interested in key press and release. We are also interested in being notified with a ClientMessage event when the window is closed. That’s why we use XSetWMProtocols to set the WM_DELETE_WINDOW property through the array of atoms included in WM_PROTOCOLS.

The window name displayed in the title bar can be set by calling XSetStandardProperties, which also sets the string displayed when the client is iconified.

XSetWMSizeHints tells the window manager our hints about the look of a window. In this case we want to set a minimum size for our window, which means we need to set the WM_NORMAL_HINTS property, that maps to a XSizeHints structure. The flags field of this structure specifies the fields in the structure we are going to set.

XMapWindow maps the window. That is, it requests to display the window on the screen. XFlush flushes the request buffer.

At the end of the main function we call EventLoop, which enters the event loop.

int ApplicationClass::EventLoop()

{

// Event loop

while (!quit)

{

XEvent event;

while ((XPending(pDisplay) > 0))

{

XNextEvent(pDisplay, &event);

HandleX11Event(event);

}

if (!quit && IsInitialized())

{

OnUpdate();

OnRender();

}

}

return 0;

}

The application will continue to process events from the event queue as long as there are events pending. Once the event queue is empty, the application can perform updating and rendering operations.

XPending returns the number of events that have been received from the X server but have not been removed from the event queue. This means that when the event queue is empty, the application can move on to other tasks, such as updating an animation and rendering a new frame on the screen.

XNextEvent copies the first event from the event queue into the specified XEvent structure and then removes it from the queue. If the event queue is empty, XNextEvent flushes the request buffer and blocks until an event is received.

HandleX11Event is our event handler.

void HandleX11Event(XEvent& event)

{

ApplicationClass* pApp = VKApplication::GetApplication();

switch (event.type)

{

case ClientMessage:

if ((Atom)event.xclient.data.l[0] == wm_delete_window)

{

quit = true;

}

break;

case KeyPress:

{

switch (event.xkey.keycode)

{

case 0x9: // Escape

quit = true;

break;

}

if (pApp)

{

pApp->OnKeyDown(static_cast<uint8_t>(event.xkey.keycode));

}

}

break;

case KeyRelease:

{

if (pApp)

{

pApp->OnKeyDown(static_cast<uint8_t>(event.xkey.keycode));

}

}

break;

default:

break;

}

}

In this case, we are calling specific application functions to handle key press and release events.

Also, we check the xclient field of the event to verify that it contains the ID of the atom associated with the WM_DELETE_WINDOW property. In that case, we set quit to true in order to exit the event loop.

Observe that data is a field of xclient, and it is defined as a union of 160-bit values where l represents an array of five long (32-bit) values. Also, the type field of xclient will contain the ID of the atom associated with the WM_PROTOCOLS property.

3 - Framework overview

The framework presented in this section is common to almost any samples we will review in the upcoming tutorials. This means that, by the end of this tutorial, you will know how to write a generic Vulkan application (or at least the backbone of a complete Vulkan application).

The following listing show the entry point of our Vulkan applications.

#include "stdafx.h"

#include "VKApplication.hpp"

#include "VKHelloWindow.hpp"

#if defined (_WIN32)

_Use_decl_annotations_

int WINAPI WinMain(HINSTANCE hInstance, HINSTANCE, char*, int nCmdShow)

{

for (size_t i = 0; i < __argc; i++)

{

VKApplication::GetArgs()->push_back(__argv[i]);

};

VKHelloWindow sample(1280, 720, "VK Hello Window");

VKApplication::Setup(&sample, true, hInstance, nCmdShow);

return VKApplication::RenderLoop();

}

#elif defined (VK_USE_PLATFORM_XLIB_KHR)

int main(const int argc, const char* argv[])

{

for (int i = 0; i < argc; i++)

{

VKApplication::GetArgs()->push_back(argv[i]);

};

VKHelloWindow sample(1280, 720, "VK Hello Window");

VKApplication::Setup(&sample, true);

return VKApplication::RenderLoop();

}

#endif

WinMain is the Windows entrypoint, while main is the Linux entrypoint. Both are called by the C/C++ runtime startup and takes some parameters.

On Windows, we are only interested in two of the four parameters passed to WinMain (the named ones).

hInstance is the base virtual address of the executable loaded in memory.

nCmdShow is an integer value that controls how to show the window we are going to create. As shown earlier in section 2.1.3, this parameter is passed as an argument to ShowWindow, which activates the window and displays it on the screen.

On Linux, main takes the number of command-line arguments entered by the user after the name of the Vulkan application, and an array of null-terminated strings representing the actual argument list.

The header file stdafx.h includes others platform-specific header files, as well as headers for using functionality from the C/C++ standard library.. Most importantly, it includes vulkan.h that provides the functions, enumerations and structures defined in the Vulkan specification.

#ifdef _WIN32

#include <windows.h>

#include <shellapi.h>

#elif defined(VK_USE_PLATFORM_XLIB_KHR)

#include <X11/Xlib.h>

#include <X11/Xutil.h>

#include <X11/Xatom.h>

#endif

#include <iostream>

#include <assert.h>

#include <string>

#include <vector>

#include <array>

#include <string.h>

#include <algorithm>

#include "vulkan.h"

The class VKHelloWindow is derived from VKSample, and defines data and methods required for a specific Vulkan sample.

#include "VKSample.hpp"

class VKHelloWindow : public VKSample

{

public:

VKHelloWindow(uint32_t width, uint32_t height, std::string name);

virtual void OnInit();

virtual void OnUpdate();

virtual void OnRender();

virtual void OnDestroy();

private:

void InitVulkan();

void SetupPipeline();

void PopulateCommandBuffer(uint32_t currentBufferIndex, uint32_t currentIndexImage);

void SubmitCommandBuffer(uint32_t currentBufferIndex);

void PresentImage(uint32_t imageIndex);

uint32_t m_commandBufferIndex = 0;

};

The base class VKSample defines data and methods used by all Vulkan samples.

class VKSample

{

public:

VKSample(uint32_t width, uint32_t height, std::string name);

virtual ~VKSample();

virtual void OnInit() = 0;

virtual void OnUpdate() = 0;

virtual void OnRender() = 0;

virtual void OnDestroy() = 0;

virtual void WindowResize(uint32_t width, uint32_t height);

// Samples override the event handlers to handle specific messages.

virtual void OnKeyDown(uint8_t /*key*/) {}

virtual void OnKeyUp(uint8_t /*key*/) {}

// Accessors.

uint32_t GetWidth() const { return m_width; }

void SetWidth(uint32_t width) { m_width = width; }

uint32_t GetHeight() const { return m_height; }

void SetHeight(uint32_t height) { m_height = height; }

const char* GetTitle() const { return m_title.c_str(); }

const std::string GetStringTitle() const { return m_title; }

const std::string GetAssetsPath() const { return m_assetsPath; };

const std::string GetWindowTitle();

void SetAssetsPath(std::string assetPath) { m_assetsPath = assetPath; }

uint64_t GetFrameCounter() const{ return m_frameCounter; }

bool IsInitialized() const { return m_initialized; }

protected:

virtual void CreateInstance();

virtual void CreateSurface();

virtual void CreateSynchronizationObjects();

virtual void CreateDevice(VkQueueFlags requestedQueueTypes);

virtual void CreateSwapchain(uint32_t* width, uint32_t* height, bool vsync);

virtual void CreateRenderPass();

virtual void CreateFrameBuffers();

virtual void AllocateCommandBuffers();

// Viewport dimensions.

uint32_t m_width;

uint32_t m_height;

float m_aspectRatio;

// Sentinel variable to check sample initialization completion.

bool m_initialized;

// Vulkan and pipeline objects.

VulkanCommonParameters m_vulkanParams;

SampleParameters m_sampleParams;

// Stores physical device properties (for e.g. checking device limits)

VkPhysicalDeviceProperties m_deviceProperties;

// Stores all available memory (type) properties for the physical device

VkPhysicalDeviceMemoryProperties m_deviceMemoryProperties;

// Stores the features available on the selected physical device (for e.g. checking if a feature is available)

VkPhysicalDeviceFeatures m_deviceFeatures;

// Frame count

StepTimer m_timer;

uint64_t m_frameCounter;

char m_lastFPS[32];

// Number of command buffers

uint32_t m_commandBufferCount = 0;

private:

// Root assets path.

std::string m_assetsPath;

// Window title.

std::string m_title;

};

SampleParameters and VulkanCommonParameters are defined as follow.

struct VulkanCommonParameters {

VkInstance Instance;

VkPhysicalDevice PhysicalDevice;

VkDevice Device;

QueueParameters GraphicsQueue;

QueueParameters PresentQueue;

VkSurfaceKHR PresentationSurface;

SwapChainParameters SwapChain;

VulkanCommonParameters() :

Instance(VK_NULL_HANDLE),

PhysicalDevice(VK_NULL_HANDLE),

Device(VK_NULL_HANDLE),

GraphicsQueue(),

PresentQueue(),

PresentationSurface(VK_NULL_HANDLE),

SwapChain() {

}

};

struct SampleParameters {

VkRenderPass RenderPass;

std::vector<VkFramebuffer> Framebuffers;

VkPipeline GraphicsPipeline;

VkPipelineLayout PipelineLayout;

VkSemaphore ImageAvailableSemaphore;

VkSemaphore RenderingFinishedSemaphore;

VkCommandPool GraphicsCommandPool;

std::vector<VkCommandBuffer> GraphicsCommandBuffers;

SampleParameters() :

RenderPass(VK_NULL_HANDLE),

Framebuffers(),

GraphicsCommandPool(VK_NULL_HANDLE),

GraphicsCommandBuffers(),

GraphicsPipeline(VK_NULL_HANDLE),

ImageAvailableSemaphore(VK_NULL_HANDLE),

RenderingFinishedSemaphore(VK_NULL_HANDLE) {

}

};

VKApplication defines data and methods used by all GUI/window applications.

struct Settings {

bool validation = false;

bool fullscreen = false;

bool vsync = false;

bool overlay = true;

};

struct WindowParameters {

#if defined(_WIN32)

HWND hWindow;

HINSTANCE hInstance;

#elif defined(VK_USE_PLATFORM_XLIB_KHR)

bool quit = false;

Display *DisplayPtr;

Window Handle;

Atom xlib_wm_delete_window = 0;

#endif

};

class VKApplication

{

public:

static void Setup(VKSample* pSample, bool enableValidation, void* hInstance = nullptr, int nCmdShow = 0);

static int RenderLoop();